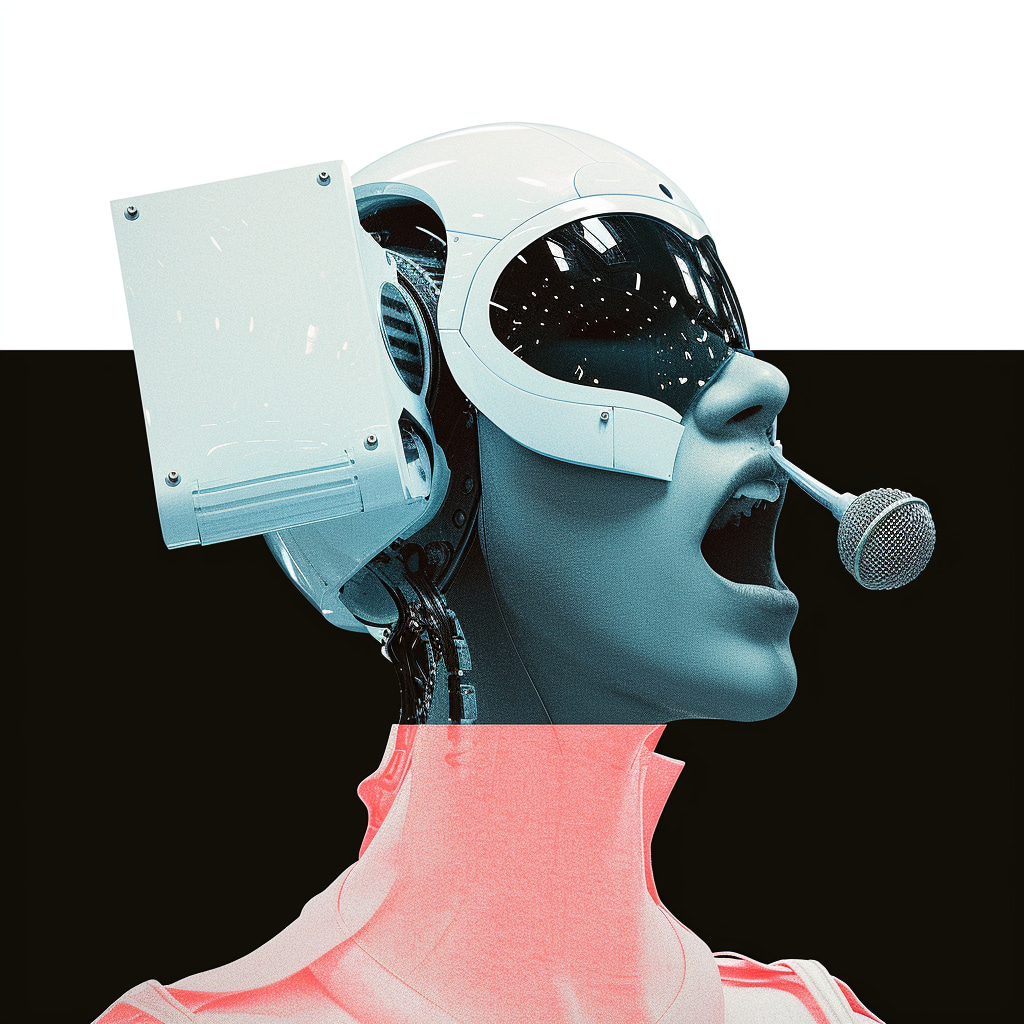

While doomsday scenarios of killer robots and job displacement are quick to grab headlines, the subtler erosion of free speech through AI-powered censorship is a real and pressing concern that deserves attention, too.

Some countries, such as the United States and the United Kingdom, have traditional free speech protections that safeguard against “prior restraint.”

Such laws essentially prevent the government from blocking speech before it’s uttered. In simpler terms, a newspaper shouldn’t be shut down for an upcoming story, even if the government deems it controversial. However, the digital age complicates this principle.

How AI Threatens the Bedrock of Free Speech

Today, social media platforms dominate our communication, becoming de facto gatekeepers of online discourse. This creates a new battleground for free speech, where AI-powered filters, employed by both governments and tech companies, can easily censor expression at scale before it ever reaches the public eye.

The sheer volume of content published across social media platforms every minute makes manual moderation impractical, which could push platforms towards AI-powered filters that offer a fast, cost-effective alternative. Yet this efficiency comes at a steep price.

Automated filters lack the human touch: the ability to apply context, cultural understanding, and critical thinking. This raises concerns about over-censorship and about the algorithms’ lack of nuance in differentiating protected expression from harmful content.

The Chilling Reality of AI-Powered Free Speech Erosion

The consequences are alarming, from marginalized voices silenced to dissenting opinions squashed and legitimate perspectives deemed unfit for public consumption.

The UK’s Online Safety Act and proposed upload filtering schemes in the US and Europe could encourage more platforms to turn to AI as an effective tool for censorship. While aiming to combat harmful content, these measures risk creating online echo chambers where only approved narratives flourish.

The potential harm extends beyond content suppression. Automated systems, shrouded in algorithmic secrecy, operate with little transparency or accountability. Who should be held responsible for their biases and errors? Where can individuals seek redress when their voices are unfairly silenced?

Killer Robots Don't Exist, But This AI Threat is Very Real