In the wrong hands, artificial intelligence can be a lethal tool. Imagine you are on night duty and you get a call from home telling you that a man is standing at your door with a gun in his hands, and your entire family is inside. How would you feel, and who would you want him to be: a criminal or a police officer?

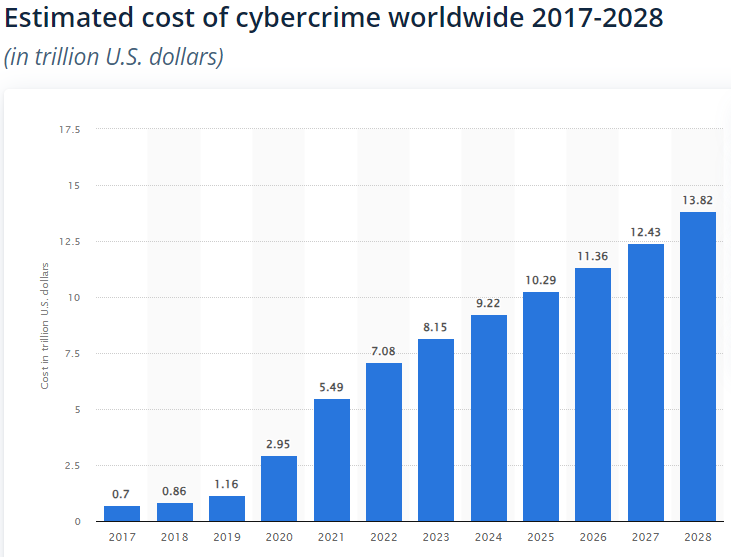

The same is true of AI, which is not just knocking at our doors but has already entered our homes. We all know what ChatGPT is, right? But the same kind of AI that powers ChatGPT has a dark side, one more sinister than most of us realize. Welcome to the dark web. There are numerous AI tools similar to ChatGPT that are built to serve the criminal side of the market, BadGPT and FraudGPT to name a few. According to experts, cybercrime, and email phishing in particular, will keep rising in the coming years.

What exactly are BadGPT and tools like it?

These are AI-powered chatbots, but not the kind you use for your routine tasks. They are uncensored models with no safeguards in place, available to anyone who wants to use them for their own purposes. They are typically used to write phishing emails or develop malware for cyberattacks, making hackers' criminal work easier. With the advent of these services, businesses are on high alert and at greater risk than ever before.

Recently, researchers at Indiana University discovered more than 200 hacking services that use large language models (LLMs), the technology behind AI chatbots, and that are already on the market for anyone to buy. The first of these services appeared in early 2023, and since then there has been no looking back: they have kept sprouting like weeds.

Most of these dark-market tools are built on open-source AI models or on cracked ("jailbroken") versions of commercial models from companies such as Anthropic. A technique called prompt injection is used to hijack these models and bypass their safety filters, so that hackers can use them without any safety checks.

An OpenAI spokesperson said that the company does its best to safeguard its products against malicious use and that it is

“always working on how we can make our systems more robust against this type of abuse.”

Source: WSJ

The negative impact of BadGPT and how to protect yourself

You might be wondering whether these tactics are really effective and whether they can actually fool anyone. The reality is that fraudulent emails written with these tools are hard to spot, and hackers train their models on detection techniques gleaned from cybersecurity software.

Recently, in an interview with GovTech, Jeff Brown, CISO (chief information security officer) for the state of Connecticut, expressed his concern about BadGPT and FraudGPT being used in cybercrime and said that email-based fraud has risen by a significant margin.

Individuals can ward off these attacks by being extra careful when sharing their personal information. Don't share your information openly, whether with a person or on a website, unless it is truly necessary, and even then do so with caution. Always investigate a website before disclosing your details, because malicious sites now look so professional that they show no hint of being malicious.
