With the rise of generative artificial intelligence tools, the proliferation of deceptive pictures, videos, and audio, commonly known as deepfakes, has become a significant concern online. From celebrities like Taylor Swift to political figures such as Donald Trump, deepfake content is blurring the lines between reality and fiction, posing serious threats ranging from scams to election manipulation.

Signs of AI manipulation

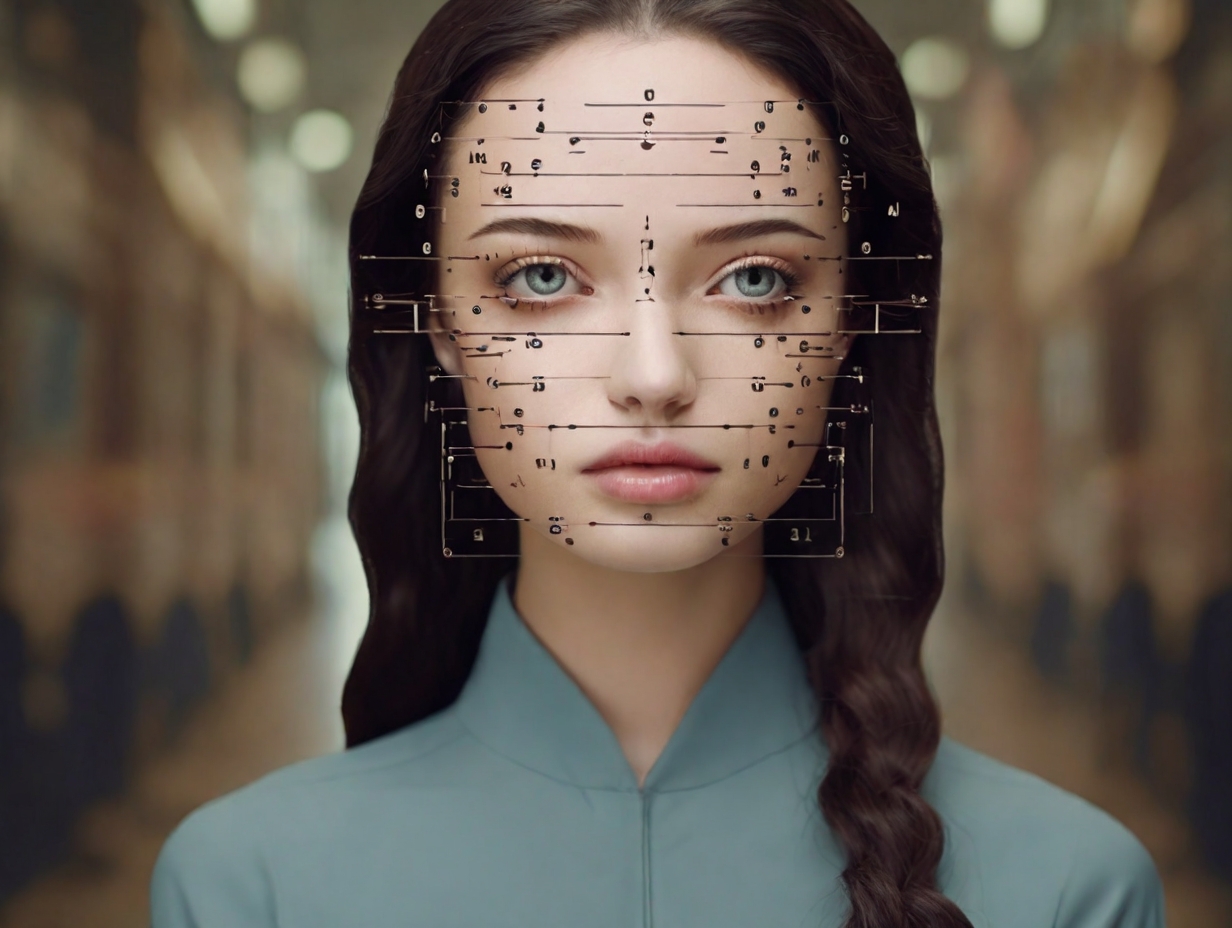

In the past, imperfect deepfake technology left behind telltale signs of manipulation, such as unnatural features or errors. However, advancements in AI have made detecting deepfakes more challenging. Despite this, there are still some indicators to watch for:

Electronic Sheen: Many AI-generated images exhibit an unnatural “smoothing effect” on the skin, giving it a polished appearance.

Inconsistencies in Lighting and Shadows: Pay attention to discrepancies between the subject and the background, particularly in lighting and shadow consistency.

Facial Features: Deepfake face-swapping often results in mismatches in facial skin tone or blurry edges around the face.

Lip Syncing: Check whether lip movements match the audio. Speech that runs slightly ahead of or behind the mouth can indicate manipulation.

Teeth Detail: Deepfake algorithms may struggle to accurately render individual teeth, leading to blurry or inconsistent representations.
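For readers who want to see how a sign like the "smoothing effect" could be turned into a number, here is a minimal sketch. It is an illustration only, not how any tool named in this article works. The function name `laplacian_variance` and the tiny test patches are hypothetical, and the input is assumed to be a grayscale patch given as rows of pixel intensities. The measure responds to fine texture: very smooth regions score near zero.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over the interior of a grayscale patch.

    gray: list of equal-length rows of numbers (for example 0-255 intensities).
    Low values mean little fine detail; high values mean a lot of local texture.
    """
    rows, cols = len(gray), len(gray[0])
    if rows < 3 or cols < 3:
        raise ValueError("patch must be at least 3x3")

    responses = []
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            # Sum of the four direct neighbours minus four times the centre pixel.
            lap = (
                gray[r - 1][c] + gray[r + 1][c]
                + gray[r][c - 1] + gray[r][c + 1]
                - 4 * gray[r][c]
            )
            responses.append(lap)
    return pvariance(responses)


# Hypothetical patches: one perfectly flat, one with a fine checkerboard texture.
flat = [[128] * 5 for _ in range(5)]
textured = [[128 + (20 if (r + c) % 2 else -20) for c in range(5)] for r in range(5)]

print("flat patch:", laplacian_variance(flat))
print("textured patch:", laplacian_variance(textured))
```

A low score only says a region is smooth. Ordinary blur, makeup, beauty filters, and heavy compression also lower it, so a number like this is a prompt for a closer look, not a verdict.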

Context matters

Consider whether the content is plausible. If a public figure appears to say or do something that seems exaggerated, unrealistic, or out of character, that is a red flag for a deepfake.

While AI is being used to create deepfakes, it can also be used to combat them. Companies like Microsoft and Intel have developed tools that analyze photos and videos for signs of manipulation. Microsoft’s authenticator tool gives a confidence score indicating whether a piece of media has been manipulated, while Intel’s FakeCatcher uses algorithms that analyze an image’s pixels to judge whether it is real or fake.

Challenges and limitations

Despite advancements in detection technology, the rapid evolution of AI presents challenges. A sign or tool that works today could be outdated tomorrow, so ongoing vigilance is needed. Moreover, relying solely on detection tools can create a false sense of security: their accuracy varies, and the arms race between deepfake creators and detectors continues.

As deepfake technology evolves, so must our detection and mitigation strategies. While there are signs to look for and tools available for analysis, vigilance remains paramount. Recognizing the potential dangers deepfakes pose, individuals and organizations must stay informed and adapt to the evolving threat landscape.

In an age where AI-driven deception is rampant, education, skepticism, and technological innovation are our best defenses against the proliferation of fake content online.
