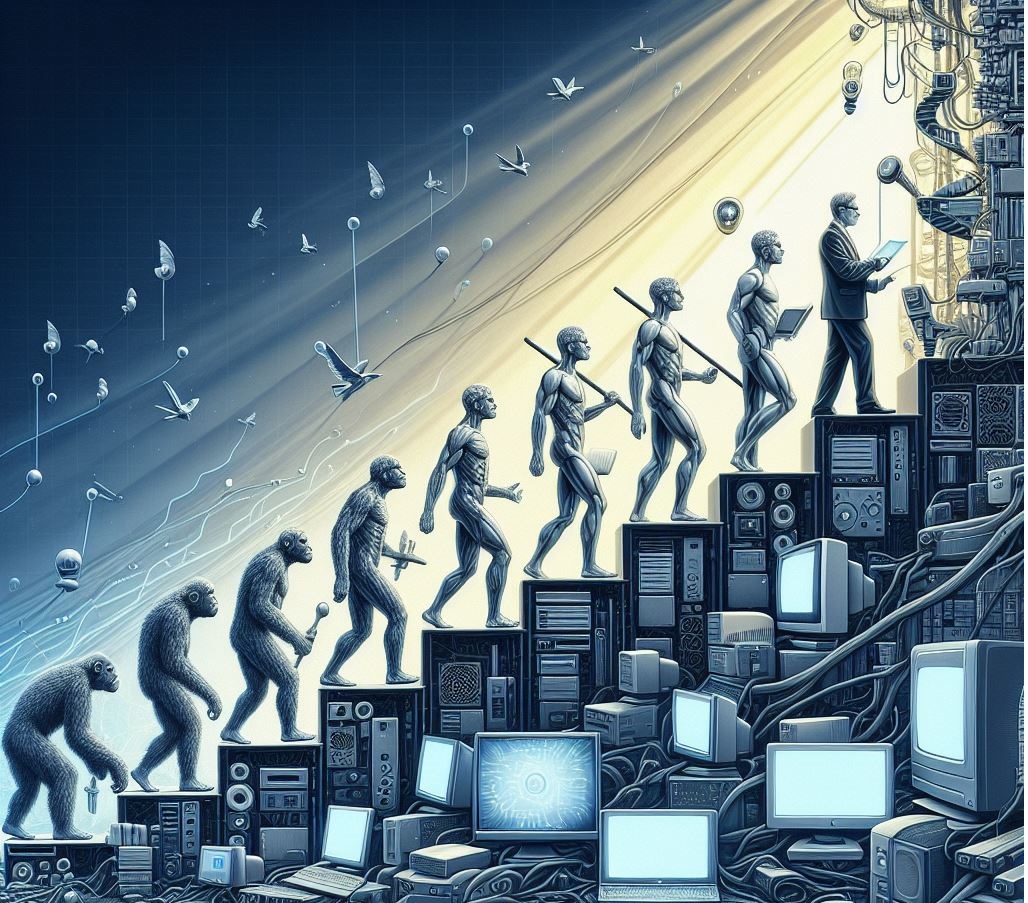

In artificial intelligence (AI), a significant shift is underway with the emergence of Large Multimodal Models (LMMs), which move the field from unimodal to multimodal learning. LMMs integrate several data modalities, such as text, images, and audio, into a single framework, a pivotal development in AI research. Humans perceive the world through several senses at once, so multimodal models are an important step for AI systems that aim to emulate human-like capabilities. This story traces the trajectory of LMMs, their applications across industries, and what this technology may mean for the future.

From Unimodal to Large Multimodal Models

Large Multimodal Models (LMMs) depart from traditional unimodal systems, in which each model operates on a single data modality. By incorporating multiple modalities, LMMs can build a more comprehensive picture of the world, closer to the way humans combine sight, sound, and language. This shift affects several domains, including language processing, computer vision, and audio recognition. LMMs let users interact with one system through text input, voice commands, and images. Applications such as helping visually impaired people browse the web, for example by describing the images on a page, show the practical value of multimodal AI.

LMMs mark a significant advance in AI’s ability to process and comprehend multimodal data. Unlike unimodal models, which are limited to a single modality, LMMs can analyze and interpret information from several sources simultaneously. This joint view improves AI’s understanding of complex real-world scenarios and opens the door to new applications across industries.

Versatility and Applications of LMMs

The versatility of LMMs extends across industries, enabling applications that unimodal systems could not support. Healthcare, robotics, e-commerce, and gaming stand to benefit significantly from multimodal capabilities. By combining data from different modalities, LMMs can improve performance and yield better-informed insights. In healthcare, for instance, an LMM can analyze medical images alongside the accompanying textual reports, supporting more accurate diagnosis and treatment planning.

On e-commerce platforms, LMMs can transform the customer experience by generating personalized recommendations based on both the textual descriptions and the visual attributes of products. Combining these modalities enables more accurate, tailored suggestions, which can raise user satisfaction and drive business growth.
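To make the idea of recommending from both text and visuals a little more concrete, here is a minimal sketch of one simple technique, late fusion: each product has a text embedding and an image embedding, the two are normalized and averaged, and products are ranked by cosine similarity to a user profile vector. This is an illustrative assumption, not how any particular LMM or platform works (many LMMs fuse modalities much earlier, inside the model). The helper names (`fuse`, `recommend`), the weight `alpha`, and the toy 3-dimensional vectors are all hypothetical.

```python
import math

def normalize(v):
    """Scale a vector to unit L2 norm so both modalities contribute on the same scale."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)

def fuse(text_emb, image_emb, alpha=0.5):
    """Hypothetical late fusion: weighted average of normalized text and image
    embeddings. alpha is the (assumed) weight given to the text modality."""
    t, i = normalize(text_emb), normalize(image_emb)
    return normalize([alpha * a + (1 - alpha) * b for a, b in zip(t, i)])

def cosine(u, v):
    """Cosine similarity between two vectors."""
    return sum(a * b for a, b in zip(normalize(u), normalize(v)))

def recommend(query_vec, catalog, k=2, alpha=0.5):
    """Rank items by cosine similarity between the query vector and each item's
    fused text+image embedding; return the ids of the top-k items."""
    scored = [
        (cosine(query_vec, fuse(item["text"], item["image"], alpha)), item_id)
        for item_id, item in catalog.items()
    ]
    scored.sort(reverse=True)
    return [item_id for _, item_id in scored[:k]]

# Toy embeddings; real ones would come from text and image encoders that are
# assumed to share one embedding space.
catalog = {
    "red_sneaker":  {"text": [1.0, 0.1, 0.0], "image": [0.9, 0.2, 0.0]},
    "blue_sneaker": {"text": [1.0, 0.0, 0.2], "image": [0.1, 0.1, 1.0]},
    "red_handbag":  {"text": [0.0, 1.0, 0.0], "image": [0.8, 0.3, 0.0]},
}
user_profile = [1.0, 0.2, 0.0]

print(recommend(user_profile, catalog, k=2))             # → ['red_sneaker', 'red_handbag']
print(recommend(user_profile, catalog, k=2, alpha=1.0))  # text only → ['red_sneaker', 'blue_sneaker']
```

With `alpha=1.0` the ranking uses text alone and favors the blue sneaker, whose description matches; with both modalities, the visually similar red handbag moves up. The weight `alpha` is a design knob for how much each modality should count.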

Future Prospects of LMMs

Although multimodal AI is still at an early stage, it holds considerable promise for the future of artificial intelligence. Bringing language understanding, computer vision, and audio processing together in a single framework opens a new era of machine comprehension. As LMMs continue to evolve, they are positioned to narrow the gap between human perception and machine understanding. Looking ahead, multimodal capabilities are expected to transform many facets of society, from personalized assistance to better-informed decision-making.

The development of LMMs is a significant milestone in AI’s journey toward human-level understanding and interaction. By learning from multimodal data, LMMs can detect intricate patterns and correlations that unimodal systems would miss. This joint approach improves AI’s ability to interpret real-world phenomena and fosters closer, more collaborative relationships between humans and machines across many domains.

As LMMs lead the way toward a more integrated approach to artificial intelligence, one question stands out: what new horizons will multimodal AI unlock as it advances, and how will it reshape human-machine interaction? The path toward more capable multimodal AI is an exciting frontier, with the potential to redefine the boundaries of technological innovation and human collaboration.