Killer robots are already on the battlefield, and they are proving to be highly destructive weapons. We should be worried, in fact very worried. They have already shown their deadly potential in the wars in Gaza and Ukraine, and the consequences could be devastating if they are handled carelessly. On April 3, +972 Magazine, an Israeli publication, published a report on Israel's use of AI to identify targets in Gaza.

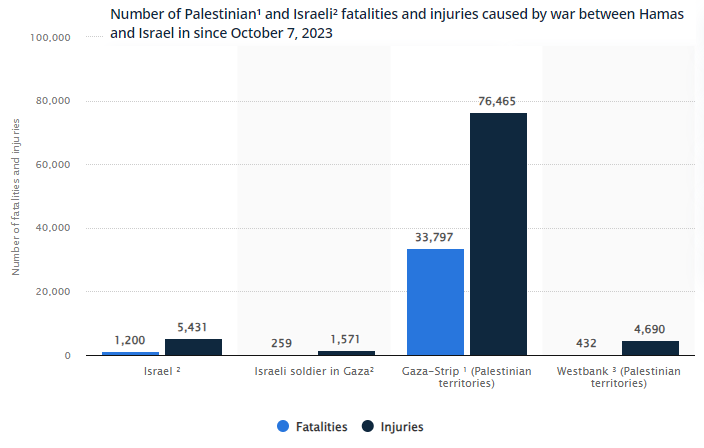

The AI system, called Lavender, reportedly generated kill lists of suspected Hamas members, including low-ranking operatives. We have no way of knowing how accurate these lists were. The most concerning revelation is that the system was used to target militants even when they were at home, with their families and children and many civilians around them. The chart below shows the number of deaths and injuries up to April 15.

Algorithms are telling armies whom to target

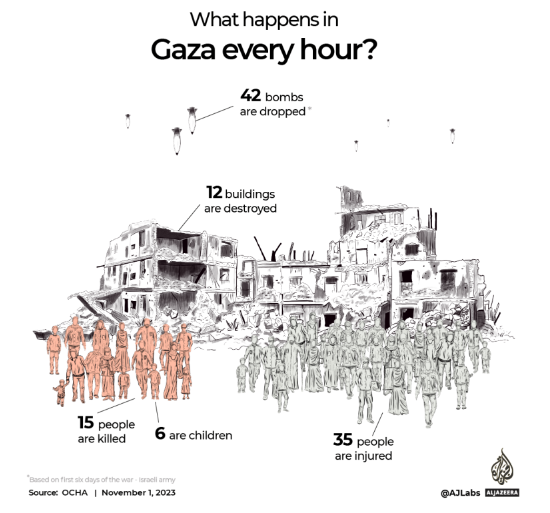

Given the high number of civilian deaths and the massive destruction of infrastructure since Israel's invasion of Gaza, the report raised serious concern among humanitarian organizations, government officials, and thoughtful people around the world, including in the US. Antonio Guterres, Secretary-General of the United Nations, said he was "deeply troubled," and John Kirby, a White House spokesperson, said that the US "was reviewing the report closely."

Guterres went further, focusing on the toll on civilians:

“No part of life and death decisions which impact entire families should be delegated to the cold calculation of algorithms.”

Source: Worldpoliticsreview.

The report was published shortly after an Israeli strike killed seven aid workers, most of them Westerners and some of them former British military personnel. Unsurprisingly, this prompted judges and lawyers across Britain to call on the government to halt arms sales to Israel, citing war crimes and serious violations of international law.

Another way of thinking about killer robots

The Campaign to Stop Killer Robots expressed concern but said that Lavender is not the kind of system it has been campaigning against since 2012: in its view, Lavender is a data-processing system, and the final decision to fire remains in human hands. Even so, one of its statements opens up another dimension of the problem. Issued after the report was published, it pointed to the:

“Lack of meaningful human control and engagement involved in the decisions to attack recommended targets.”

Source: Worldpoliticsreview.

The phrase "lack of meaningful human control" adds another layer of complexity to the already heavily criticized use of artificial intelligence and killer robots, and it gives campaigners a new concern to advocate on.

Artificial intelligence is usually portrayed as something that may one day take over the planet and cause catastrophic harm, even human extinction. A more immediate concern, however, is that forces relying on AI may exercise less caution when selecting targets and feel less accountable for the outcome, which can lead to unethical decisions in war, as we have already seen in Gaza. At the end of the day, the danger is not robots making decisions against humans, but humans making robotic decisions without fully using their own judgment in sensitive, life-and-death situations. As one source quoted in the report put it:

“The machine did it coldly.”

Source: +972mag.

There is another angle as well: reliance on such systems could undermine programmers' ability to communicate effectively with AI systems, and that breakdown can end in war crimes.

The original news story was published by +972 Magazine.
