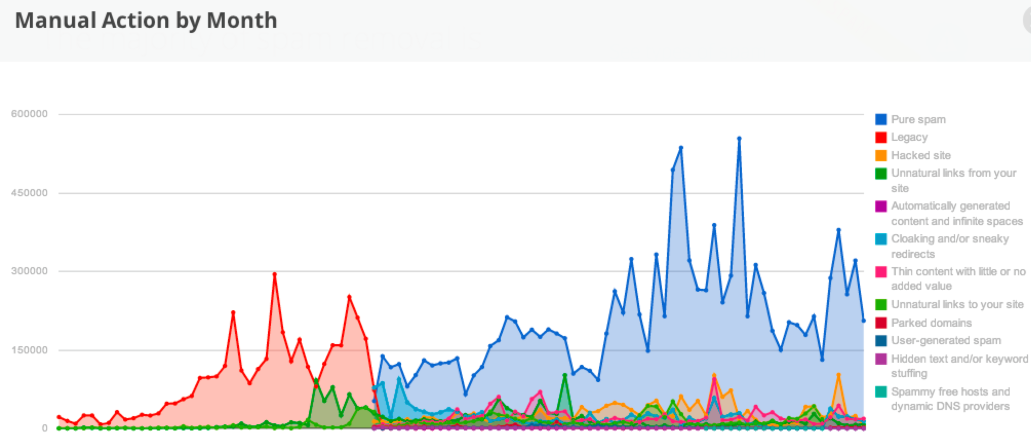

Spam, as we know it, has been with us from the very start. Before ChatGPT, spam content made up nearly two percent of search results, but the dynamics have since changed sharply. Robo-spam, or AI-generated spam, is everywhere, and it now accounts for ten percent of results.

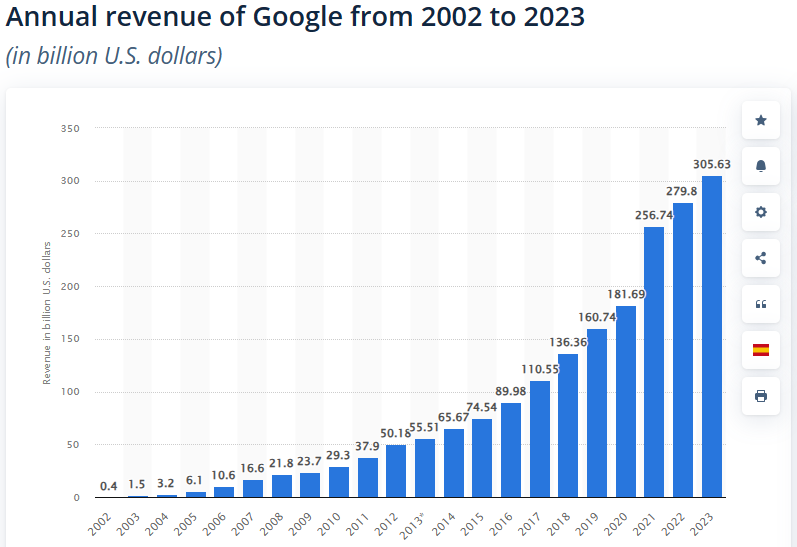

It’s no longer just clickbait content but AI-generated content, which has a much better chance of passing through the many layers of filtering and is easier and cheaper to produce. Google is now delisting sites manually, which has its own consequences, because Google also loses ad revenue. So one might ask why Google is spending its own money to delist these sites. Well, it’s a game of revenue again.

AI spam is a clear threat to Google

There is a good chance that this cheap content will push users away from Google, as they are already tired of the pre-AI clickbait trash in sponsored search results. The Web was once a place to discover things beyond the big names, and now AI spam has become a threat to the entire web. Filtering and stopping this type of content is difficult.

One solution could be to remove AI content and AI spammers by automating the process of identifying the cheaters, but that has problems too. The first headache is that AI is still developing and its generated content will get more polished, so winning against it is not easy: it can develop traits that bypass an automated system.

Another problem is cost. Automated detection is resource-intensive and requires massive sums of money, like sitting down at a casino’s high-roller table while drunk: you may end up sitting on the moon alone. Remember the bold claim of a billionaire? Bill Gates predicted that the world would be rid of spam by 2006, but even a billionaire can’t get it right.

The situation is worse for all

The situation is not good for Google or for others who are looking to integrate AI into search results and the visitor-facing frontend. Why? Because the system will have to be trained on data that is already heavily contaminated, and then there is, again, the problem of revenue distribution. It is still unclear how this will work out, as the result will take the form of a short report rather than a list of links. And we know that Google earns when we click on links, not when we read text on its own page.

So how will ads be integrated then? Maybe within the short written report, but that will take revenue share away from content providers and make them suffer. It will not be a winning situation for anyone.

Google started its journey with a good set of algorithms and a clean, simple site. It knew what good sites looked like: their structure and their other components. Sites with better quality got more revenue and exposure, and Google earned more from them too. But playing a fair game is usually not easy, and there are many who want to cheat the system. In that case, the revenue is shared by the cheaters and, of course, Google. That gave Google and its like the resources to game the entire system for their own benefit, which is not a fair situation for everyone else.

So if AI cheats Google, then what are the options? Sites are already required to put up a privacy statement up front for cookies and the like; now they should carry a quality assurance statement as well, for example flags for AI content, marketing, or ownership. But the first question is how honest these giants are in following their own rules. They will have to prioritize good content, adhere to their policies, block cheaters who are found guilty, and never show them to users again.

The original story can be seen here.
