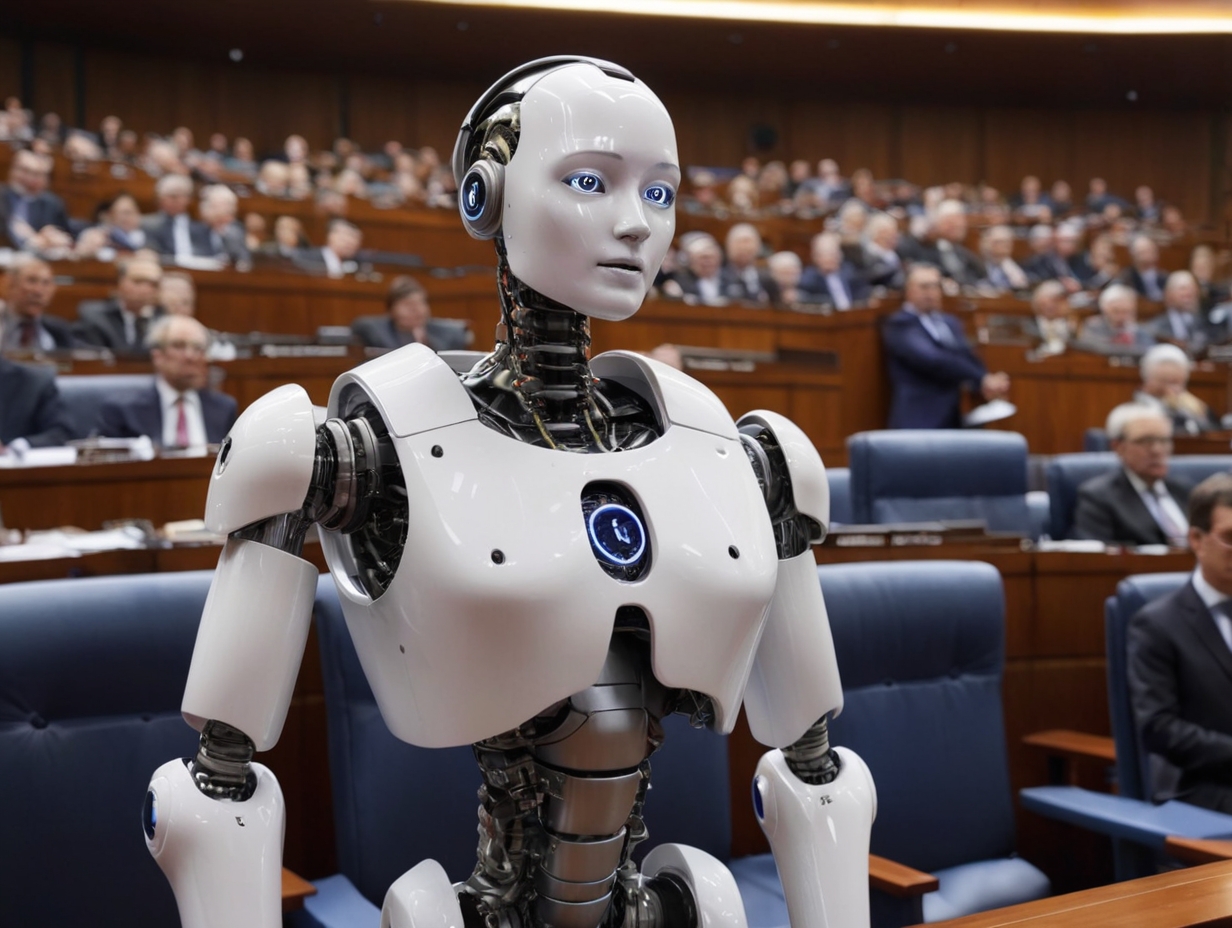

The European Union has taken a significant step towards regulating the rapidly evolving field of artificial intelligence (AI) by giving final approval to the Artificial Intelligence Act, groundbreaking legislation that aims to establish a comprehensive framework for the development and use of AI systems within the 27-nation bloc.

The AI Act, which has been in the works for five years, was overwhelmingly approved by members of the European Parliament, marking a pivotal moment in the quest to ensure that AI technology is developed and deployed in a responsible and human-centric manner.

A global signpost

The Artificial Intelligence Act is expected to serve as a global signpost for other governments grappling with the challenge of regulating AI technology. Dragos Tudorache, a Romanian legislator who was a co-leader of the Parliament negotiations on the draft law, emphasized the significance of the legislation, stating, “The AI Act has nudged the future of AI in a human-centric direction, in a direction where humans are in control of the technology and where it – the technology – helps us leverage new discoveries, economic growth, societal progress and unlock human potential.”

Risk-based approach

The AI Act takes a risk-based approach to regulating AI systems, with the level of scrutiny increasing proportionally to the potential risks posed by the technology. Low-risk systems, such as content recommendation systems or spam filters, will face lighter rules, primarily requiring transparency about the use of AI.

On the other hand, high-risk applications of AI, including medical devices and critical infrastructure systems, will face stricter requirements, such as the use of high-quality data and the provision of clear information to users.

Certain AI uses deemed to pose an unacceptable risk are outright banned under the new legislation. These include social scoring systems that govern human behavior, some types of predictive policing, and emotion recognition systems in schools and workplaces. Additionally, the law prohibits law enforcement from using AI-powered remote “biometric identification” systems in public spaces, except in cases of serious crimes like kidnapping or terrorism.

The regulation also addresses the rapid rise of general-purpose AI models, such as OpenAI's ChatGPT, by introducing provisions for their oversight. Developers of these models will be required to provide detailed summaries of the data used for training and to follow EU copyright law. In addition, AI-generated deepfakes must be labeled as artificially manipulated.

Addressing systemic risks

Recognizing the potential for powerful AI models to pose "systemic risks," the AI Act imposes additional scrutiny on the largest and most advanced systems, such as OpenAI's GPT-4 and Google's Gemini. Companies providing these systems will need to assess and mitigate risks, report serious incidents, implement cybersecurity measures, and disclose how much energy their models consume.

The EU's concerns stem from the potential for these powerful AI systems to cause serious accidents, be misused for cyberattacks, or spread harmful biases across numerous applications, affecting many people.

While the AI Act aims to establish a framework for responsible AI development, it also seeks to promote innovation within the EU. Big tech companies have generally supported the need for regulation while lobbying to ensure that the rules work in their favor.

OpenAI's CEO, Sam Altman, initially caused a stir by suggesting that the company could pull out of Europe if it could not comply with the AI Act, but he later backtracked, stating that there were no plans to leave.

As the world grapples with the rapid advancement of AI technology, the European Union’s Artificial Intelligence Act represents a pioneering effort to strike a balance between fostering innovation and addressing the potential risks and challenges posed by this transformative technology.