There is a darker side to artificial intelligence that is often discussed but rarely acted on: its climate cost.

For all its excitement and potential, AI requires huge amounts of energy to train, and it keeps consuming energy every time it is used to write text or create images. These processes can release substantial amounts of carbon, depending on where the data center that runs the model is located.

Bigger models need more energy

Experts say that carbon emissions will only get worse as the technology matures, because firms are racing to build ever-larger models. The technology scales, so performance improves with size, which is good for business but costly for the environment.

Alex de Vries, the founder of Digiconomist, a firm that studies the environmental consequences of new technologies, says that bigger models need more energy, which is bad for the environment.

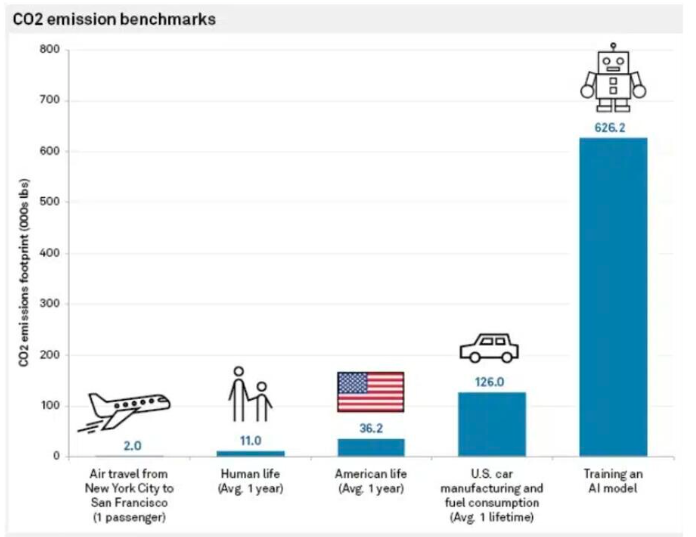

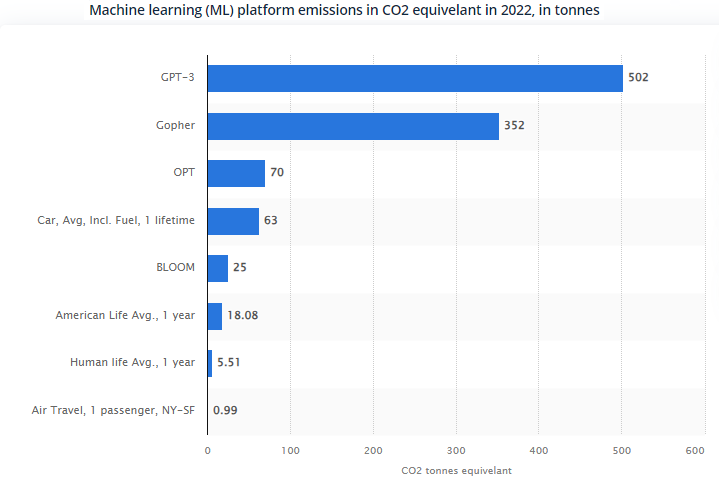

Training large-scale AI models, such as those behind ChatGPT, consumes a lot of energy, as researchers have estimated over the years. David Patterson, a professor of computer science at UC Berkeley and the lead author of a research paper published in 2021, wrote that GPT-3, the model OpenAI later refined into ChatGPT, required an estimated 1,287 megawatt-hours of electricity for training. That is enough to power 123 average American homes for an entire year.
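As a rough check on that comparison, the two quoted figures can be divided to see what annual household consumption they imply. This is only a back-of-the-envelope sketch: the constant and function names are illustrative, and the training figure is read as energy in megawatt-hours.

```python
# Figures quoted in the article, read as energy (megawatt-hours).
GPT3_TRAINING_MWH = 1287
HOMES_POWERED_FOR_A_YEAR = 123


def implied_mwh_per_home(total_mwh: float, homes: float) -> float:
    """Annual energy per home implied by a 'powers N homes for a year' comparison."""
    return total_mwh / homes


per_home = implied_mwh_per_home(GPT3_TRAINING_MWH, HOMES_POWERED_FOR_A_YEAR)
print(f"Implied use per home: {per_home:.2f} MWh per year")    # 10.46
print(f"That is about {per_home * 1000 / 365:.1f} kWh per day")  # 28.7
```

The result, a little over 10 MWh per home per year, is the household figure the comparison implicitly assumes.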

GPT-3 is one of the world's best-known AI models and may have been the largest of its time, but many models developed since then also consume huge amounts of energy.

According to Sasha Luccioni, the AI and climate lead at Hugging Face, training Gopher, a Google DeepMind model announced in 2021, needed an estimated 1,066 megawatt-hours, a figure she cited in a research paper published in 2022.

The current generation of models has an even bigger climate cost

But both of these belong to a previous generation of AI models and are considerably small by today's standards. GPT-3's successor, GPT-4, is thought to be about 10 times larger, and training it required an estimated 51 to 62 gigawatt-hours, which, according to researcher Kasper Groes Albin Ludvigsen, is more than 4,600 American homes use in a year.
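To put the two generations side by side, the quoted figures can be converted to a common unit. The sketch below reads the GPT-4 range as gigawatt-hours and the GPT-3 figure as megawatt-hours, and it reuses the household consumption implied by the article's own GPT-3 comparison (1,287 MWh for 123 homes for a year). All names are illustrative.

```python
# Figures quoted in the article, read as energy rather than power.
GPT3_TRAINING_MWH = 1287
GPT4_TRAINING_GWH_RANGE = (51, 62)
HOMES_FOR_GPT3 = 123  # homes powered for a year by GPT-3's training energy

# Annual use per home implied by the GPT-3 comparison (about 10.5 MWh).
MWH_PER_HOME_YEAR = GPT3_TRAINING_MWH / HOMES_FOR_GPT3


def compare_to_gpt3(gwh: float) -> tuple[float, float]:
    """Return (multiple of GPT-3's training energy, equivalent home-years)."""
    mwh = gwh * 1000  # 1 GWh = 1,000 MWh
    return mwh / GPT3_TRAINING_MWH, mwh / MWH_PER_HOME_YEAR


for gwh in GPT4_TRAINING_GWH_RANGE:
    ratio, homes = compare_to_gpt3(gwh)
    print(f"{gwh} GWh: about {ratio:.0f}x GPT-3, about {homes:,.0f} homes for a year")
```

Under these assumptions the range works out to roughly 40 to 48 times GPT-3's training energy, or about 4,900 to 5,900 homes for a year, which is consistent with the "more than 4,600 homes" comparison.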

Similarly, Google now has a much bigger model than Gopher, called Gemini. Google has not disclosed how much energy it requires, but the rule of thumb is simple: the bigger the model, the more energy it needs.

These estimates cover only the development and training phases. Once deployed, the models must produce output in response to user requests, and producing that output also requires energy.

According to experts, answering 1,000 ChatGPT queries consumes about 47 watt-hours, which is like powering five ordinary LED bulbs for one hour. That adds up quickly, and it covers text-based responses only.
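The bulb comparison and the way the per-query cost scales can be sketched in a few lines. The 47 figure is read here as watt-hours (energy) per 1,000 queries. The daily query volume is a purely hypothetical number chosen for illustration, not a reported statistic.

```python
# Figure quoted in the article, read as watt-hours per 1,000 queries.
WH_PER_1000_QUERIES = 47
BULBS = 5
HOURS = 1

# Power per bulb implied by "five LED bulbs for one hour".
watts_per_bulb = WH_PER_1000_QUERIES / (BULBS * HOURS)


def energy_kwh(queries: int) -> float:
    """Energy in kilowatt-hours for a given number of text queries."""
    return queries / 1000 * WH_PER_1000_QUERIES / 1000


# Hypothetical volume, for illustration only.
HYPOTHETICAL_QUERIES_PER_DAY = 10_000_000

print(f"Implied bulb power: {watts_per_bulb} W")  # 9.4 W
print(f"Energy for {HYPOTHETICAL_QUERIES_PER_DAY:,} queries: "
      f"{energy_kwh(HYPOTHETICAL_QUERIES_PER_DAY):.0f} kWh")  # 470 kWh
```

A single query costs very little, but the total grows linearly with usage, which is why inference can rival training over a model's lifetime.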

Experts argue that AI developers can lower their carbon footprint by using data centers that run on clean energy, because the carbon emissions of AI vary widely depending on where a model is hosted and run.

According to Bloomberg, demand for electricity has already been rising, and the added demand from expanding data centers is outpacing the deployment of renewable energy sources. As a result, utilities around the world are delaying the retirement of coal and natural gas power plants, which adds to the carbon footprint of AI.

The original story can be seen here.
