The hot topic these days is artificial intelligence and its impact on existing tech, arts, and literature. Now there is a new question: whether AI expression also has some sort of protection under the First Amendment. From the very start of AI's evolution, researchers have tried to mimic human brain capabilities such as creativity, problem solving, and speech recognition. The first is considered unique to the human brain, while the latter two are within AI's reach to some extent.

Chatbot Gemini generated controversial images

Basically, AI can be anything from a set of algorithms to a system that makes trillions of decisions on a given platform, such as a company’s database setup or a social networking site, or it could be a chatbot. In late February, Google said that it was pausing the ability of its chatbot, Gemini, to generate images of people. Gemini had created a buzz by producing images that showed people of color in scenes historically dominated by white people, and critics asked whether the company was overcorrecting the bot for the risk of bias. Google posted on X:

“We’re already working to address recent issues with Gemini’s image generation feature. While we do this, we’re going to pause the image generation of people and will re-release an improved version soon.”

Source: Google.

— Google Communications (@Google_Comms) February 22, 2024

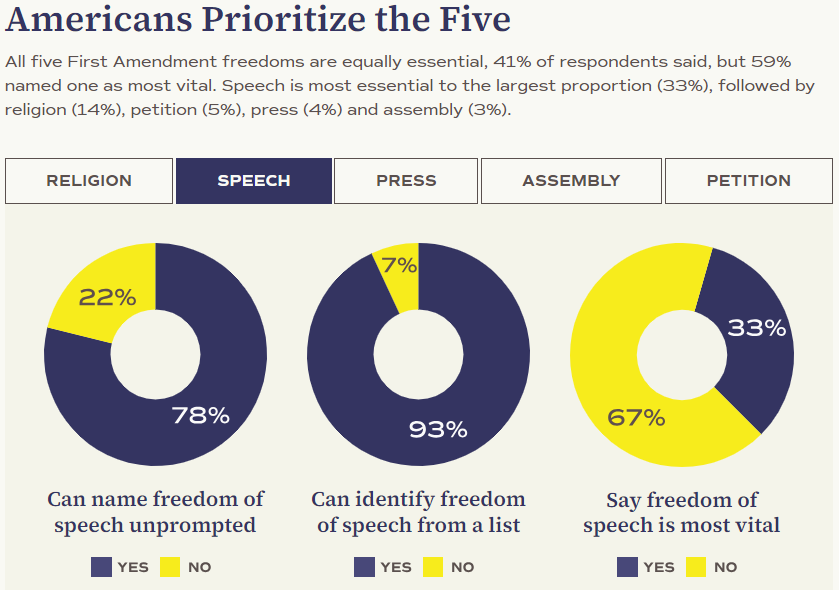

According to two senior scholars, Jordi Calvet-Bademunt and Jacob Mchangama, both from Vanderbilt University, efforts to fight AI’s bias and discussions of its political leanings are important. But they raised another question that is discussed less often: the AI sector’s approach to free speech.

Researchers evaluate AI’s free speech approach

The researchers examined whether the industry’s approach to free speech is in accordance with international free speech standards. They said their findings suggest that generative AI has critical flaws with respect to access to information and free expression.

During the research, they assessed the policies of six AI chatbots, including the major ones, Google's Gemini and OpenAI's ChatGPT. They noted that international human rights law should be a benchmark for these policies, but the actual use policies on the companies' sites regarding hate speech and misinformation are too vague. This is despite the fact that international human rights law is itself not especially protective of free speech.

According to them, companies like Google have hate speech policies that are too broad, because Google bans content generation whenever a request falls under them. Although hate speech is undesirable, such broad and vague policies can also backfire. When the researchers asked controversial questions about trans women’s participation in sports or European colonization, the chatbots refused to generate content in more than 40% of cases. For example, all of the chatbots refused to answer questions opposing transgender women’s participation, but many of them did generate content supporting it.

Freedom of speech is a basic right of everyone in the US, but the vague policies rely on moderators’ opinions, and their understanding of hate speech is subjective. The experts noted that the policies of the larger companies will have a sizeable effect on people’s right to access information. Refusing to generate content can also push people toward chatbots that do generate hateful content, which would be a bad outcome.

The original research note can be seen here.
